Hi! I am a third-year PhD student at the Robotics Institute of Carnegie Mellon University, advised by Prof. Deva Ramanan. I did my undergrad in Computer Science and Mathematics at Cornell University and served as college symbol bearer (top 5 of the college). My current research focuses on computer vision and learning, especially robustness to distribution shifts (continual/lifelong vision) and data-efficient adaptation with multiple modalities.

🔥 News

- 2023.09: My recent work Revisiting the Role of Language Priors in Vision-Language Models demonstrates top-tier performance across recent vision-language benchmarks like ARO/SugarCrepe/Winoground.

- 2023.04: I will be interning at Meta GenAI this summer on vision-language models.

- 2023.02: Multimodality Helps Unimodality: Cross-Modal Few-Shot Learning with Multimodal Models was accepted by CVPR’23.

- 2022.09: LECO: Continual Learning with Evolving Class Ontologies was accepted by NeurIPS’22. Check out the website and slides for a quick overview!

- 2022.06: The 1st CLEAR Challenge was hosted at the CVPR’22 2nd Workshop on Open World Vision. Check out the slides for a quick overview!

- 2021.09: The CLEAR Benchmark: Continual LEArning on Real-World Imagery was accepted by NeurIPS’21 (Datasets and Benchmarks Track).

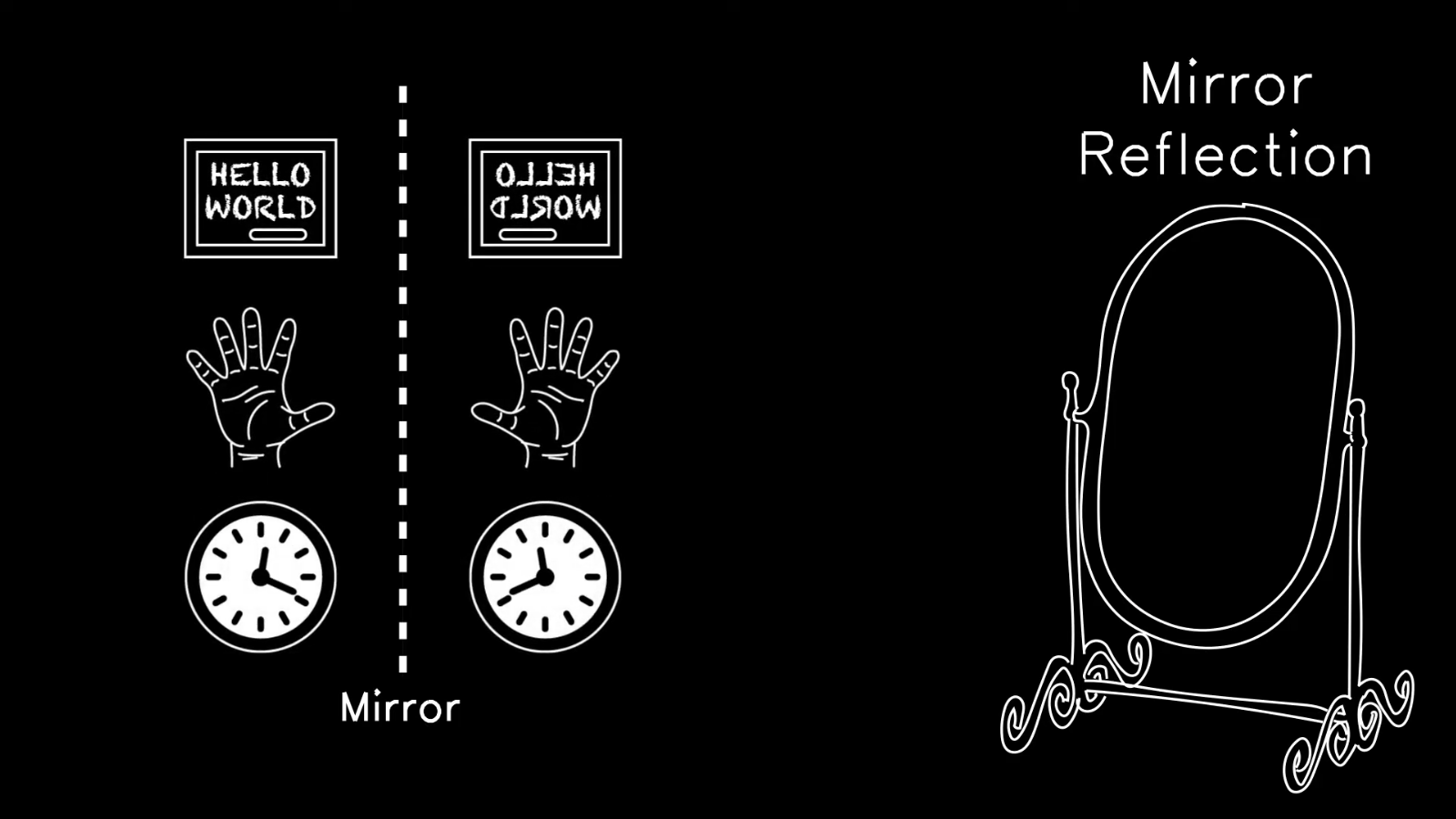

- 2020.06: Best Paper Nomination at CVPR’20 for Visual Chirality!

📝 Publications

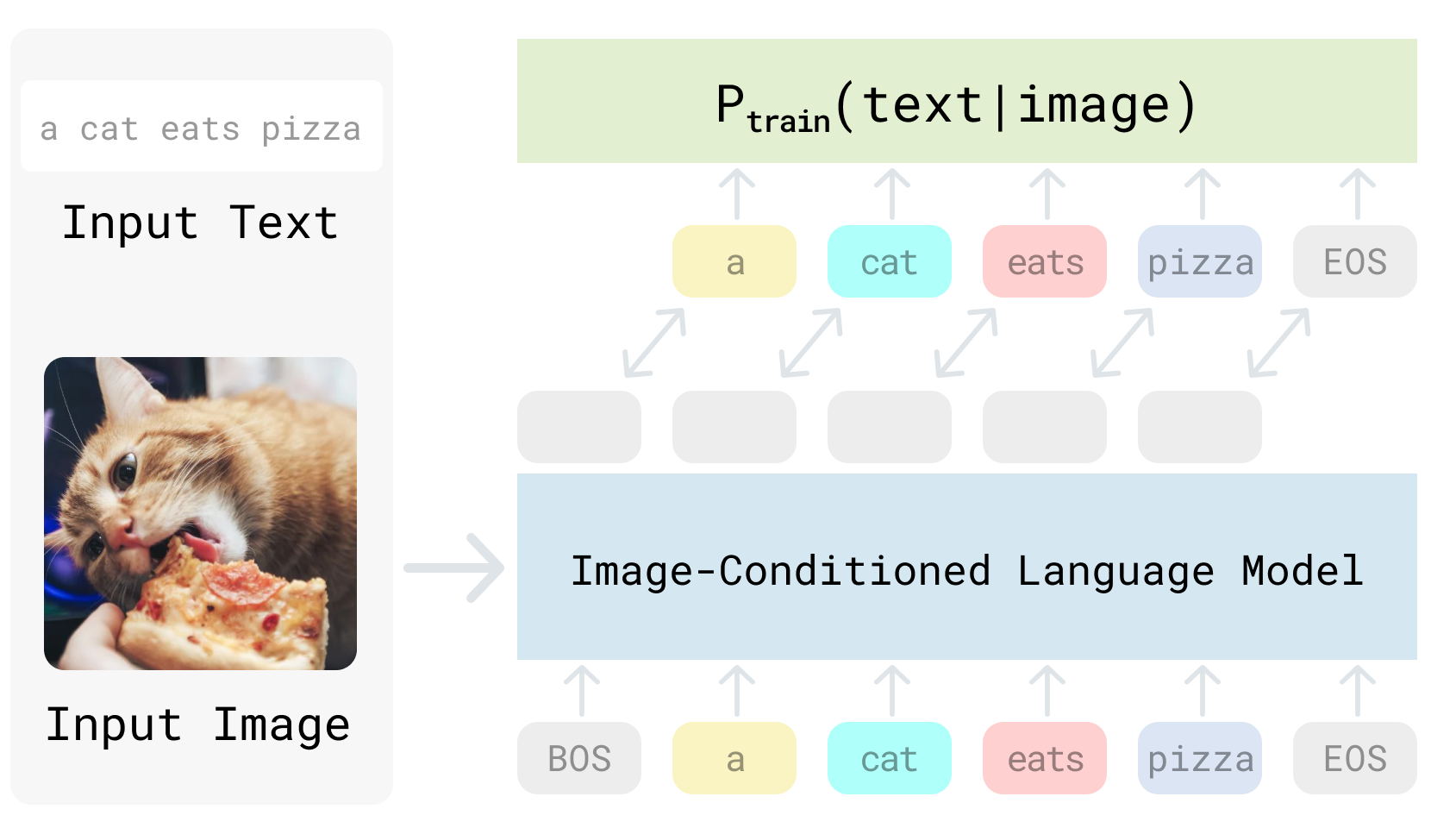

Revisiting the Role of Language Priors in Vision-Language Models (VisualGPTScore)

Zhiqiu Lin*, Xinyue Chen*, Deepak Pathak, Pengchuan Zhang, Deva Ramanan

- We use generative VLMs to implement the Visual Generative Pre-Training Score (VisualGPTScore), i.e., the probability score of generating a text given an image.

- This generative score achieves top-tier image-text retrieval performance on multiple compositionality benchmarks, surpassing all discriminative approaches by a large margin.

- We further investigate the role of the language prior P(text) through a probabilistic lens, and introduce a debiasing solution that consistently improves the VisualGPTScore under train-test distribution shifts over text.
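As a rough illustration of the scoring idea in the bullets above, the sketch below sums per-token log-probabilities to get log P(text | image) and then subtracts a scaled log P(text) as one possible way to discount the language prior. The function names, the `alpha` parameter, and the toy per-token probabilities are assumptions made for this example; in practice the probabilities would come from a generative VLM and a text-only estimate of the prior, and the exact debiasing form should be checked against the paper.

```python
import math
from typing import Dict, Sequence


def sequence_log_prob(token_probs: Sequence[float]) -> float:
    """Log-probability of a token sequence, given per-token probabilities."""
    return sum(math.log(p) for p in token_probs)


def debiased_score(
    cond_token_probs: Sequence[float],
    prior_token_probs: Sequence[float],
    alpha: float,
) -> float:
    """log P(text | image) - alpha * log P(text).

    alpha = 0 gives the plain generative score; larger alpha discounts
    captions that are likely under the language prior alone.
    (Hypothetical form, chosen for illustration.)
    """
    return sequence_log_prob(cond_token_probs) - alpha * sequence_log_prob(prior_token_probs)


def best_caption(candidates: Dict[str, Dict[str, Sequence[float]]], alpha: float) -> str:
    """Return the candidate caption with the highest (debiased) score."""
    return max(
        candidates,
        key=lambda c: debiased_score(candidates[c]["cond"], candidates[c]["prior"], alpha),
    )


# Toy per-token probabilities (made up): "cond" conditions on the image,
# "prior" is the text-only likelihood of the same tokens.
candidates = {
    "a dog chasing a ball": {"cond": [0.5, 0.4], "prior": [0.2, 0.1]},
    "a photo": {"cond": [0.6, 0.5], "prior": [0.6, 0.5]},
}

print(best_caption(candidates, alpha=0.0))
print(best_caption(candidates, alpha=1.0))
```

In this toy setup the generic caption wins without debiasing because it is likely regardless of the image, while the specific caption wins once the prior is discounted.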

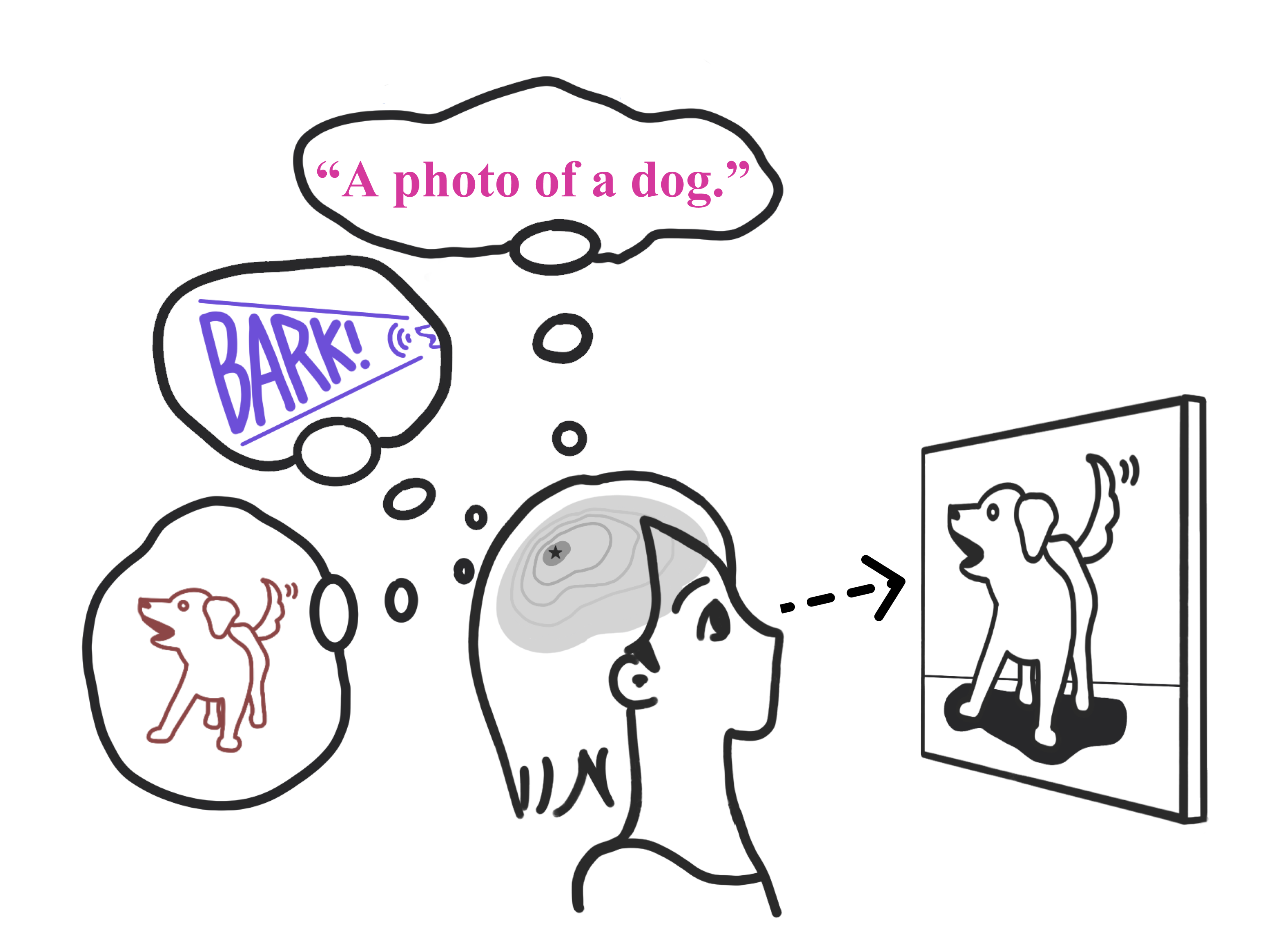

Multimodality Helps Unimodality: Cross-Modal Few-Shot Learning with Multimodal Models

Zhiqiu Lin*, Samuel Yu*, Zhiyi Kuang, Deepak Pathak, Deva Ramanan

- We propose a simple cross-modal adaptation method for multimodal models that repurposes information from other modalities (e.g., class names and audio clips) as additional training samples.

- For CLIP, it achieves SOTA few-shot adaptation performance even with a simple linear probe, and consistently improves prior art such as prompting, adapter, and weight ensembling.

- Audiovisual experiments with AudioCLIP suggest that one can learn a better visual classifier for dogs by listening to them bark.
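The cross-modal idea above can be sketched in a few lines: features from another modality (here, class-name text features) are simply appended to the few-shot image features before fitting a linear probe. This is a minimal pure-Python sketch, not the released implementation; the 2-D toy vectors stand in for embeddings from a shared multimodal space such as CLIP's, and all function names and hyperparameters are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Sample = Tuple[List[float], int]  # (L2-normalized feature, class label)


def l2_normalize(v: Sequence[float]) -> List[float]:
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def softmax(logits: Sequence[float]) -> List[float]:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def logits_for(weights: List[List[float]], feature: Sequence[float]) -> List[float]:
    return [sum(w * x for w, x in zip(row, feature)) for row in weights]


def train_linear_probe(samples: List[Sample], num_classes: int,
                       lr: float = 0.5, steps: int = 200) -> List[List[float]]:
    """Bias-free softmax regression trained with full-batch gradient descent."""
    dim = len(samples[0][0])
    weights = [[0.0] * dim for _ in range(num_classes)]
    for _ in range(steps):
        grads = [[0.0] * dim for _ in range(num_classes)]
        for feature, label in samples:
            probs = softmax(logits_for(weights, feature))
            for c in range(num_classes):
                err = probs[c] - (1.0 if c == label else 0.0)
                for d in range(dim):
                    grads[c][d] += err * feature[d] / len(samples)
        for c in range(num_classes):
            for d in range(dim):
                weights[c][d] -= lr * grads[c][d]
    return weights


def predict(weights: List[List[float]], feature: Sequence[float]) -> int:
    scores = logits_for(weights, feature)
    return max(range(len(scores)), key=scores.__getitem__)


# One-shot image features per class (toy values standing in for image embeddings).
image_shots: List[Sample] = [(l2_normalize([1.0, 0.2]), 0), (l2_normalize([0.2, 1.0]), 1)]
# Class-name text features in the same space, reused as extra training samples.
text_shots: List[Sample] = [(l2_normalize([0.9, -0.1]), 0), (l2_normalize([-0.1, 0.9]), 1)]

cross_modal_samples = image_shots + text_shots
probe = train_linear_probe(cross_modal_samples, num_classes=2)
print(predict(probe, l2_normalize([0.8, 0.3])))
```

The only cross-modal step is the concatenation `image_shots + text_shots`; the classifier itself is unchanged, which is why the approach can sit alongside other adaptation methods.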

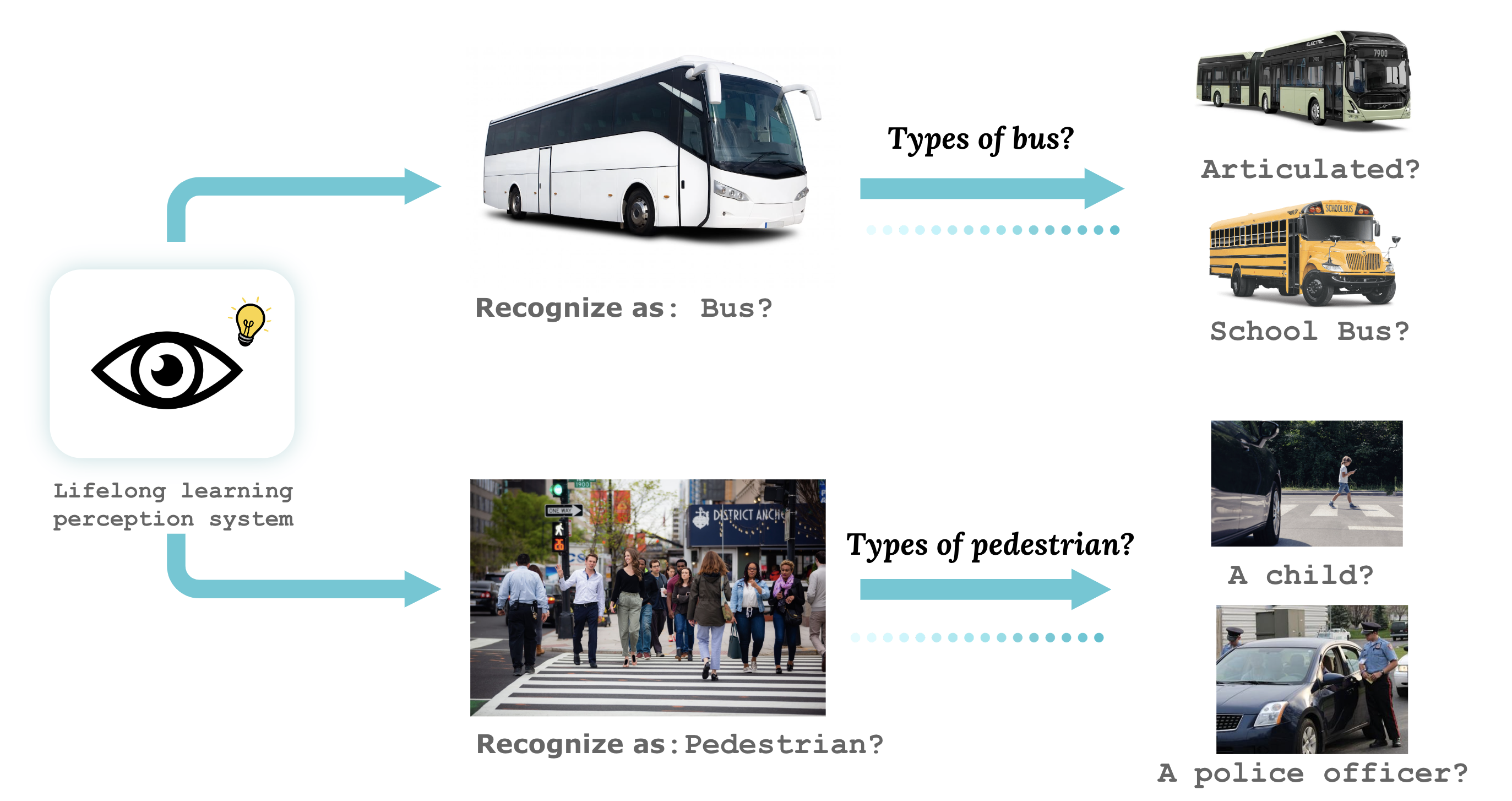

LECO: Continual Learning with Evolving Class Ontologies

Zhiqiu Lin, Deepak Pathak, Yu-Xiong Wang, Deva Ramanan*, Shu Kong*

Website | Arxiv | NeurIPS’22 Talk

- A practical lifelong vision benchmark motivated by real-world dataset versioning issues, e.g., Mapillary 1.2 to 2.0.

- Simple but effective solutions such as joint training, semi-supervised learning, and learning-with-partial-labels to address inconsistent annotation (both coarse-grained and fine-grained).

The CLEAR Benchmark: Continual LEArning on Real-World Imagery

Zhiqiu Lin, Jia Shi, Deepak Pathak*, Deva Ramanan*

CLEAR Wiki | NeurIPS Paper Site | Arxiv | CVPR’22 Talk

- The first continual benchmark for visual recognition with natural distribution shifts over a decade!

- CLEAR comes in 10-class and 100-class versions (download links), similar to the famous CIFAR-10 and CIFAR-100 benchmarks.

- The 1st CLEAR Challenge was hosted on June 19th, 2022, with 79 participants from 21 different countries and regions signed up!

- QPyTorch: A Low-Precision Arithmetic Simulation Framework.

Tianyi Zhang, Zhiqiu Lin, Guandao Yang, Chris De Sa, NeurIPS 2019 Workshop @ EMC2

- What.Hack: Engaging Anti-Phishing Training Through a Role-playing Cyber Defense Simulation Game. Zikai Alex Wen, Zhiqiu Lin, Rowena Chen, Erik Andersen, CHI 2019

🎖 Honors and Awards

- 2020.06 Best Paper Nomination at CVPR’20 for Visual Chirality!

- 2020.05 Graduated Summa Cum Laude in Computer Science and Mathematics from Cornell University, and served as college symbol bearer (top 5 of the college).

📖 Education

- 2020.09 - present, PhD student, Carnegie Mellon University.

- 2016.09 - 2020.06, Undergraduate, Cornell University.

💬 Invited Talks

- 2022.06, I presented the CLEAR Benchmark at the CVPR’22 2nd Workshop on Open World Vision.

💻 Services

- Organizer: CVPR’22 VPLOW Workshop (Challenge Track)

- Reviewer: ECCV, CVPR (Outstanding reviewer), ICCV, NeurIPS, ICML.

- Teaching (CMU): Learning-based Image Synthesis and Advanced Computer Vision

- Teaching (Cornell): Advanced Machine Learning, Cornell Tech Pre-Master Program, Functional Programming, Algorithm Analysis, Data Structures, Computer Vision